Who will validate my model? How to apply peer review to Data Science projects

“Two heads are better than one.” This traditional aphorism reminds us that solutions assessed by several people tend to be sounder than those based on a single opinion. A critical phase of academic research rests on the same idea: the so-called peer review process, which evaluates whether a manuscript is suitable for publication. This process is carried out by experts in the field and serves to uphold quality standards, thereby improving the work and lending it credibility.

The application of Data Science and Artificial Intelligence techniques in industry has much in common with academia, in that part of the work is based on experimentation. At BBVA AI Factory, we are more than 40 professionals, including data scientists, engineers, data architects, and business experts, working on a wide range of projects: Natural Language Processing, predictive engines, alert optimization, and fraud detection systems, among others. To improve quality, and thereby make our systems more robust and carry out internal audits, we have developed a methodology inspired by peer review in the academic community.

This methodology also fulfils a second purpose: to foster knowledge transfer and let people participate more actively in different projects without becoming part of their day-to-day development. The methodology was designed as a cross-team, collaborative project carried out in several stages.

In a first phase, we formed a working group in which people from different teams and levels of experience defined the initial points of a review methodology that would cover the basic requirements of our projects. We didn’t start from scratch; we were inspired by large technology companies in other areas that have worked on similar proposals (for instance, AI-based system auditing [1, 2]), by data scientists who shared their experiences in different posts [3], and by non-tech opinion pieces [4, 5]. The combination of these materials and our experience in data science projects at BBVA resulted in a first version of the peer review methodology.

In a second phase, the process was opened to contributions from all AI Factory data scientists. The feedback we collected allowed us to refine the methodology further to fit our needs and to develop it collaboratively, so that we all felt ownership of it.

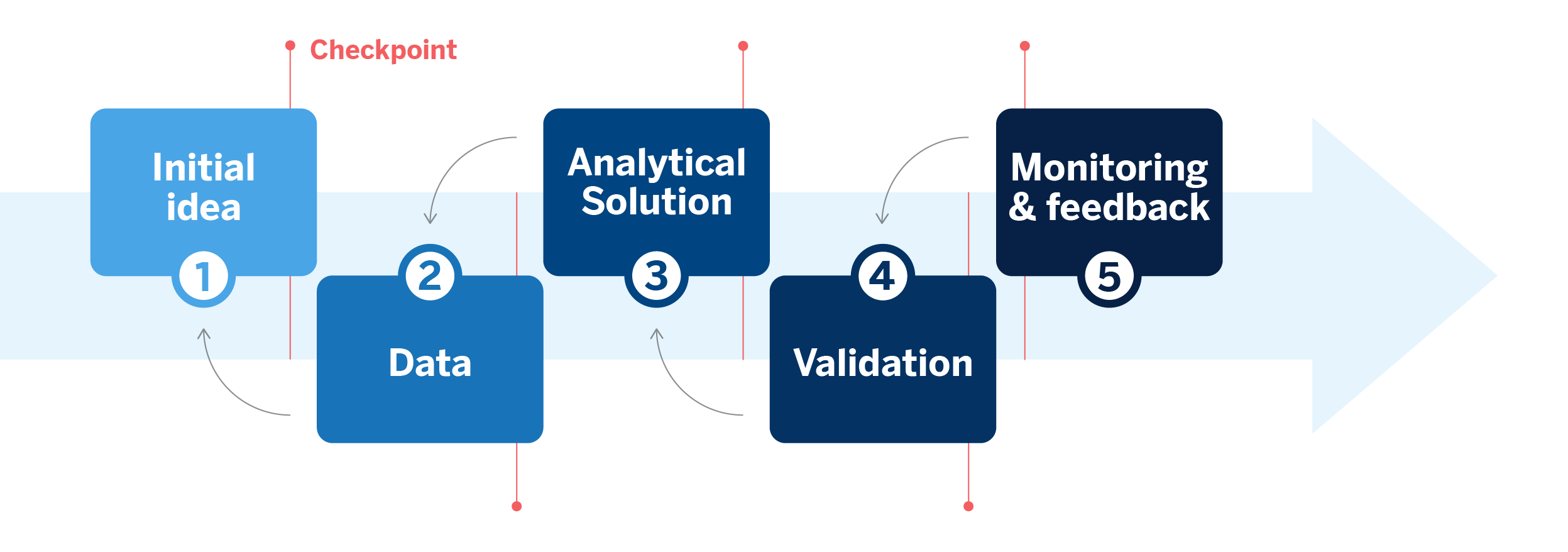

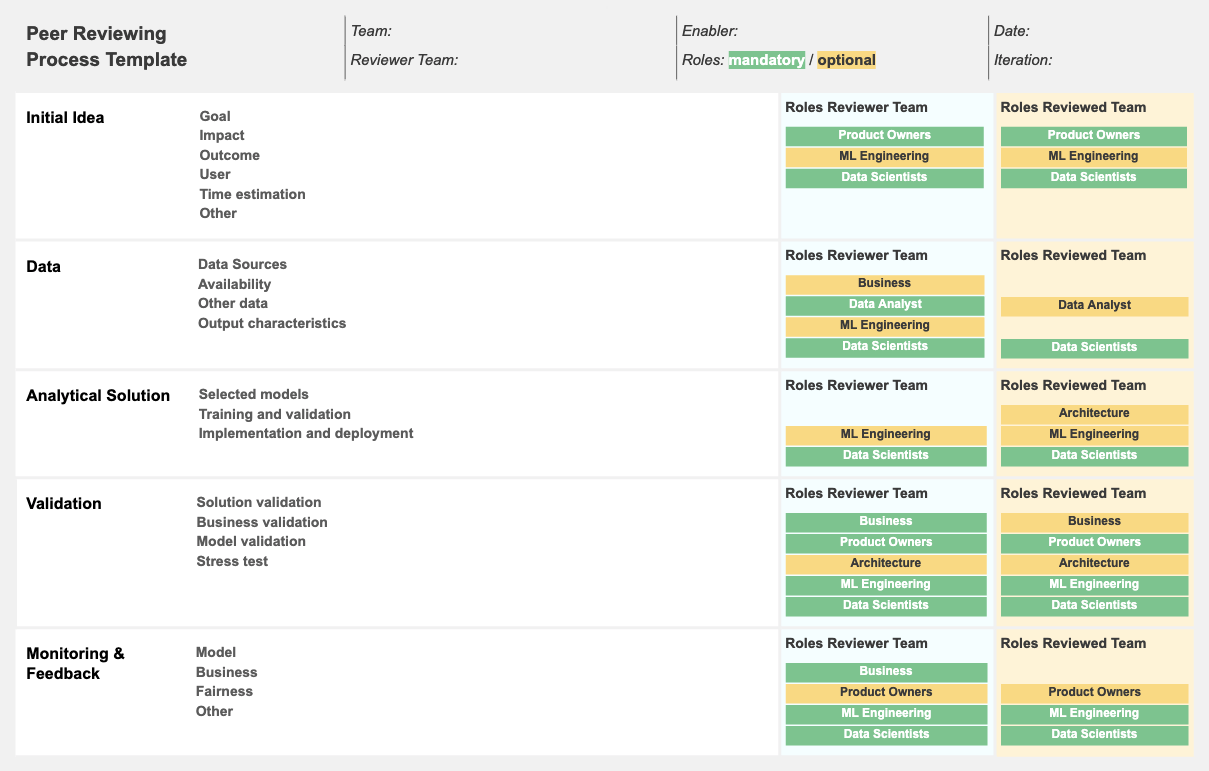

The result of this effort is a flexible, modifiable system that will be applied to all AI Factory projects over the next few months. This process divides every Data Science project into five phases:

1. Initial idea: the objective, scope and feasibility of the project are analyzed.
2. Data: focuses on the input and output information needed to build the solution.
3. Analytical solution: covers each of the iterations of the model development process, based on the features obtained in the previous phase.
4. Validation: deals with ratifying the solution through the business KPIs and the metrics used for the training, validation and test sets.
5. Monitoring: the aim is to determine what we want to monitor once the solution is in production, from different perspectives, i.e. those of stakeholders and data scientists.

Once the phases were established, we had to define how the peer review process would be carried out. At the start of a project, a reviewer team of at least two people is appointed, led by a Senior Data Scientist. This team meets regularly with the reviewed team, which provides the documentation needed to ensure that all decisions made throughout the five phases are understood.
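As a rough illustration, the reviewer team rule could be encoded as a small check. This is only a sketch: the `Reviewer` class, the `is_senior` flag and the `build_reviewer_team` function are hypothetical names, and it assumes the lead counts toward the minimum of two people.

```python
from dataclasses import dataclass
from typing import List

# "A minimum of two people" comes from the text; counting the lead
# toward that minimum is an assumption of this sketch.
MIN_REVIEWERS = 2


@dataclass(frozen=True)
class Reviewer:
    name: str
    is_senior: bool = False  # hypothetical flag for "Senior Data Scientist"


def build_reviewer_team(lead: Reviewer, others: List[Reviewer]) -> List[Reviewer]:
    """Return the full reviewer team (lead first) or raise ValueError."""
    if not lead.is_senior:
        raise ValueError("the reviewer team must be led by a Senior Data Scientist")
    # Drop the lead from `others` if it was listed twice.
    team = [lead] + [r for r in others if r != lead]
    if len(team) < MIN_REVIEWERS:
        raise ValueError(f"a reviewer team needs at least {MIN_REVIEWERS} people")
    return team
```

A check like this could run when a project is registered, so that a review never starts with an incomplete team.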

These periodic checkpoints are mandatory at the end of phases one, two, three and four, and at the beginning of phase five, to ensure that everything is in order before going to production. In addition, to keep the process flexible enough for projects of different complexity, extra on-demand reviews can be organized during any phase. At these meetings, the reviewer team asks questions and provides constructive feedback to the reviewed team. If the evaluation is positive, the project moves on to the next phase and a one-page document is written up reflecting the content and conclusions of the sessions.
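The checkpoint schedule described above can be sketched as a small tracker. This is an illustrative sketch, not our internal tooling: `Phase`, `MANDATORY` and `ReviewTracker` are hypothetical names, it assumes checkpoints are passed strictly in order, and it leaves out on-demand reviews.

```python
from enum import IntEnum
from typing import Dict, List, Optional, Tuple


class Phase(IntEnum):
    INITIAL_IDEA = 1
    DATA = 2
    ANALYTICAL_SOLUTION = 3
    VALIDATION = 4
    MONITORING = 5


Checkpoint = Tuple[Phase, str]  # (phase, "end" or "start")

# Mandatory checkpoints: end of phases 1-4, beginning of phase 5.
MANDATORY: List[Checkpoint] = [
    (Phase.INITIAL_IDEA, "end"),
    (Phase.DATA, "end"),
    (Phase.ANALYTICAL_SOLUTION, "end"),
    (Phase.VALIDATION, "end"),
    (Phase.MONITORING, "start"),
]


class ReviewTracker:
    def __init__(self) -> None:
        # One-pager summary stored per checkpoint that was passed.
        self.one_pagers: Dict[Checkpoint, str] = {}

    def next_checkpoint(self) -> Optional[Checkpoint]:
        """First mandatory checkpoint not yet passed, or None if all passed."""
        for checkpoint in MANDATORY:
            if checkpoint not in self.one_pagers:
                return checkpoint
        return None

    def record(self, checkpoint: Checkpoint, positive: bool, summary: str) -> bool:
        """Record a review outcome; only a positive one unlocks the next step."""
        expected = self.next_checkpoint()
        if checkpoint != expected:
            raise ValueError(f"expected checkpoint {expected}, got {checkpoint}")
        if positive:
            self.one_pagers[checkpoint] = summary
        return positive

    def ready_for_production(self) -> bool:
        return self.next_checkpoint() is None
```

A negative evaluation leaves the tracker where it was, so the same checkpoint has to be repeated before the project can advance.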

At the end of the process, a final checkpoint is carried out to verify that the documentation is correct and self-explanatory, in order to decide the next steps of the project. At the same time, there is a review of the whole process.

The methodology is being introduced at BBVA AI Factory through three pilot projects that cover the wide range of use cases present in our company, from building improved embeddings of card transactions to using graphs in financial pattern analysis scenarios. We used these pilot projects to peer review the methodology itself, collecting feedback from both reviewed and reviewer teams while also identifying improvements for future versions of the methodology.

Furthermore, we plan to include in future versions of the methodology a set of guidelines that help us improve our ability to detect bias and therefore avoid unfairness. This way, members of the reviewed and reviewer teams can question the fairness of the projects and propose improvements that safeguard the impartiality and inclusiveness of our algorithms. We want our solutions to be collaborative, robust and fair by design in order to prepare ourselves for the future of banking.

References

1. Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing, FAccT 2020

2. The value of a shared understanding of AI models, Google Model Cards

3. Peer Reviewing Data Science Projects, Shay Palachy (2015)

4. A ‘Red Team’ Exercise Would Strengthen Climate Science

5. Why, How, What, In That Order: Using The Golden Circle To Improve Your Business & Yourself