Data Products

Teaching machines to assist BBVA financial advisors

10/11/2021

The number of BBVA clients who communicate with their financial advisors through our app is increasing by the day. Here we explain how we have experimented with generative NLP models to help our advisors.

In the field of technology, experimentation and innovation are key aspects in our job. This is particularly true when it comes to developing AI-based solutions. At BBVA AI Factory we are committed to allocating specific time slots to experimenting with state-of-the-art technology and also to working on ideas and prototypes that can later be incorporated into BBVA’s portfolio of AI-based solutions. These are what we call innovation sprints.

In one such sprint, we asked ourselves how we could help financial advisors in their conversations with clients. The BBVA managers, who help and advise clients in managing their finances, sometimes search for responses to the most common FAQs within pre-defined answer repositories. This confirmed to us the potential of developing an AI system that could suggest to them possible answers to client questions and respond with a single click. The idea behind this system would be to save them time typing answers that do not require their expert knowledge, thus allowing them to focus on those that provide the most value to the client.

So we got down to work. Once the problem was defined, different ways of addressing it soon began to emerge. On the one hand, we thought of a system to search for the most similar question in the historical data to the one posed by the client, and, subsequently, to evaluate whether the answer given at the time is valid for the current situation. On the other hand, we also tried clustering the questions and suggesting the pre-established canonical answer for the cluster to which the question belongs. However, these solutions required too much inference time or were overly manual.

Eventually, the solution that proved to be the most efficient from the point of view of both inference time and the ability to suggest automatic answers to a number of questions on different topics (without carrying out prior clustering), was the sequence to sequence models, also known as seq2seq models.

¿What is seq2seq?

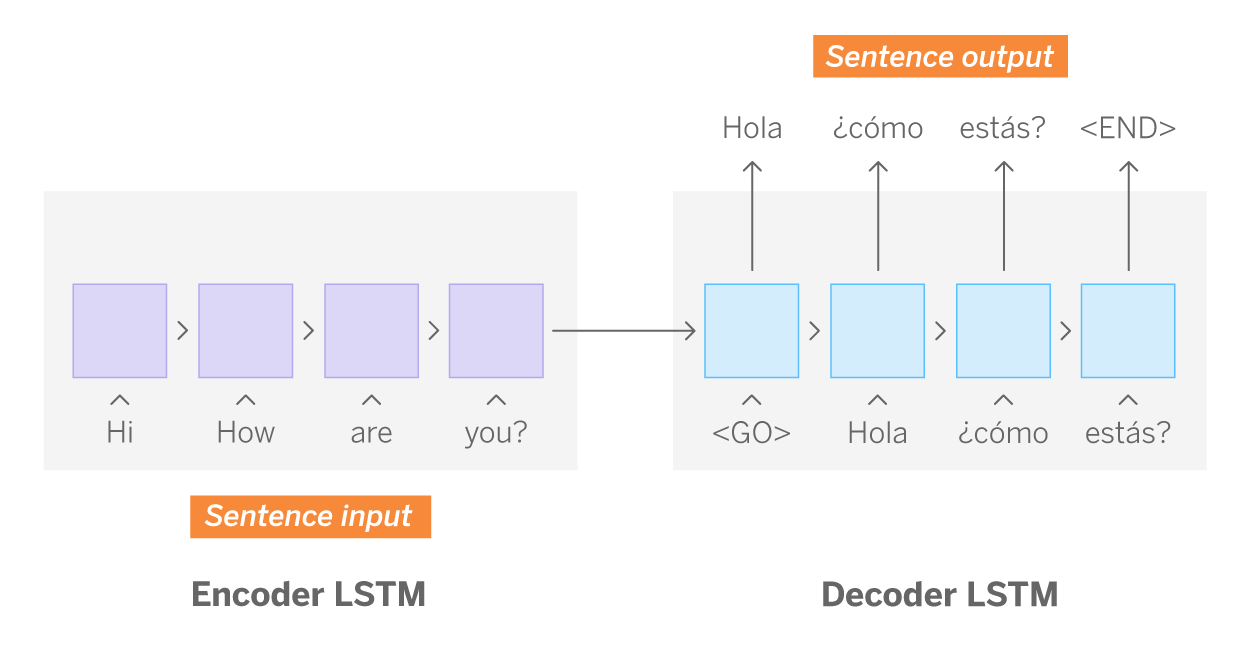

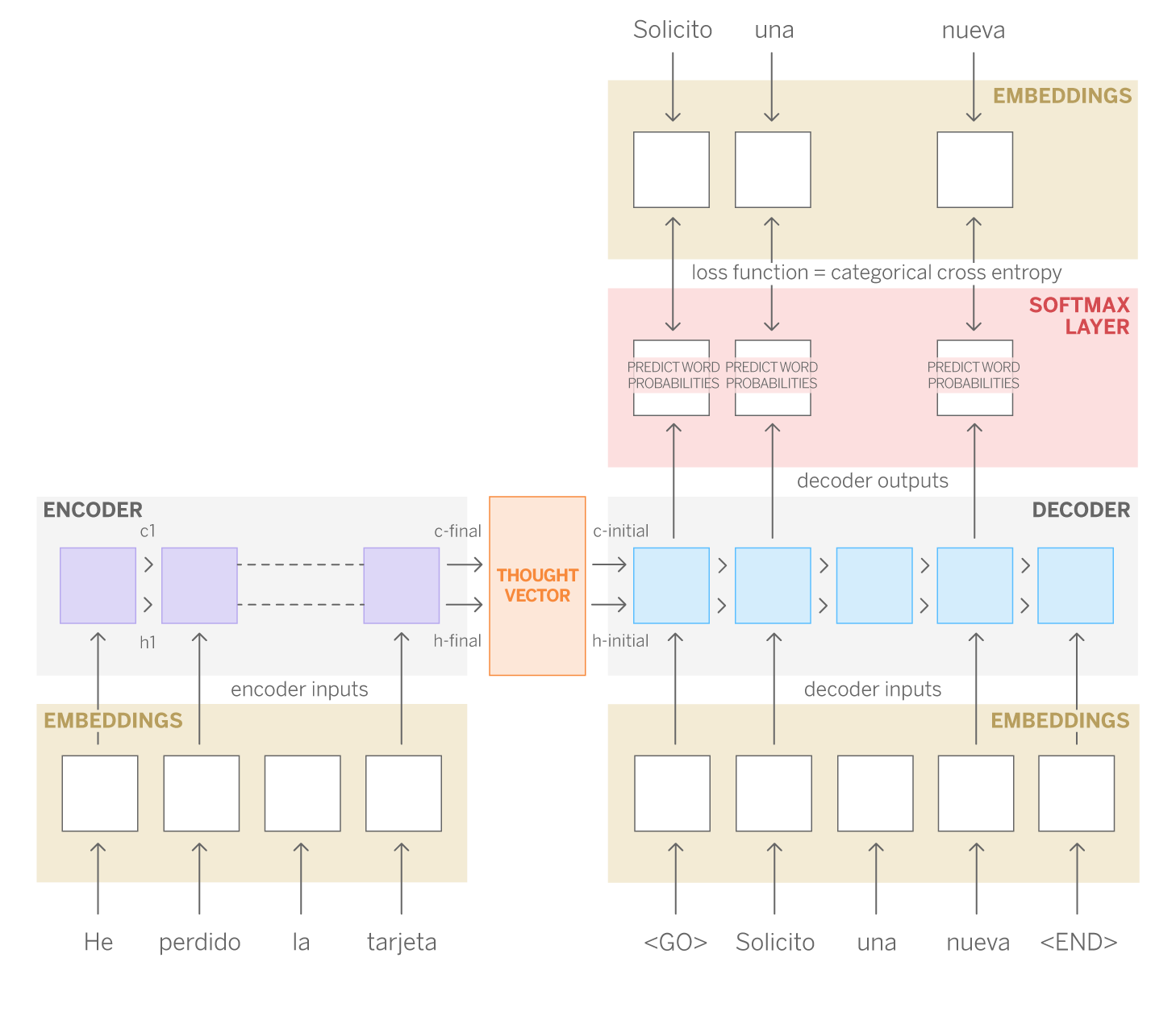

Seq2seq models take a sequence of items from one domain and generate another sequence of items from a different domain. One of its paradigmatic uses is the automatic translation of texts; a trained seq2seq model enables the transformation of a sequence of words written in one language into a sequence of words that maintains the same meaning in another language. The basic architecture of seq2seq consists of two recurrent networks (decoder and encoder), called Long-Short Term Memory (LSTM).

How have we applied seq2seq to build our answer suggestion system?

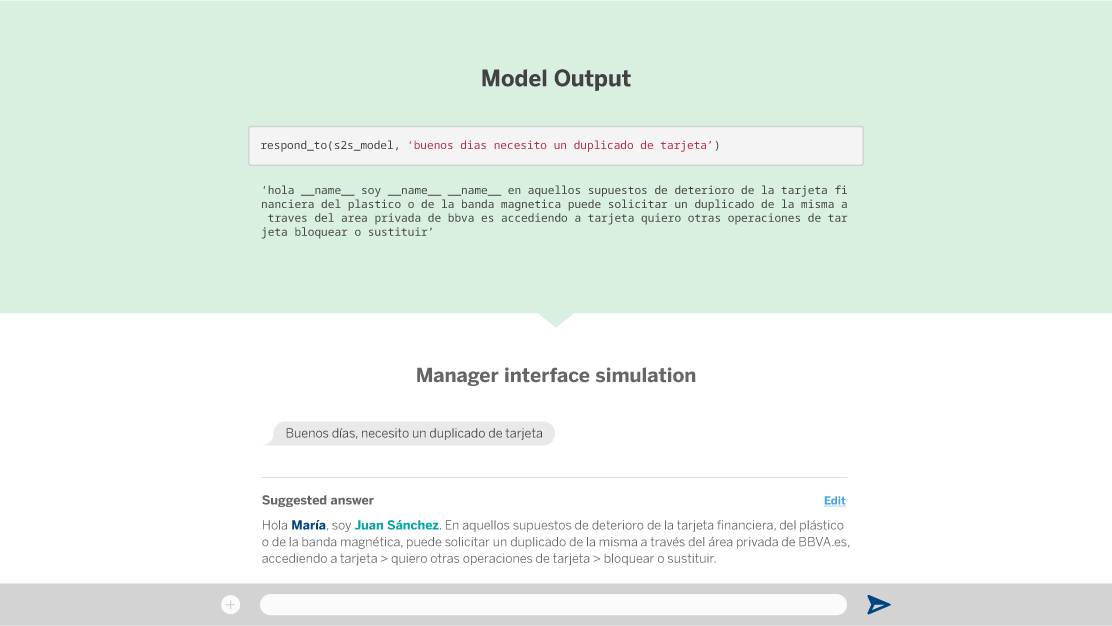

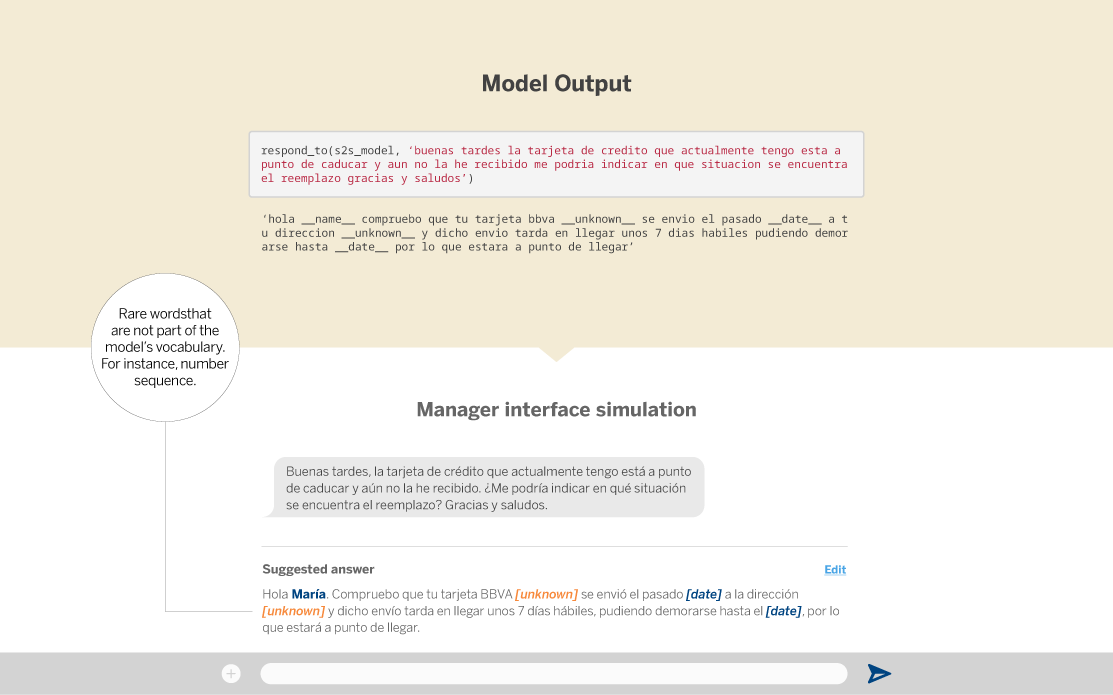

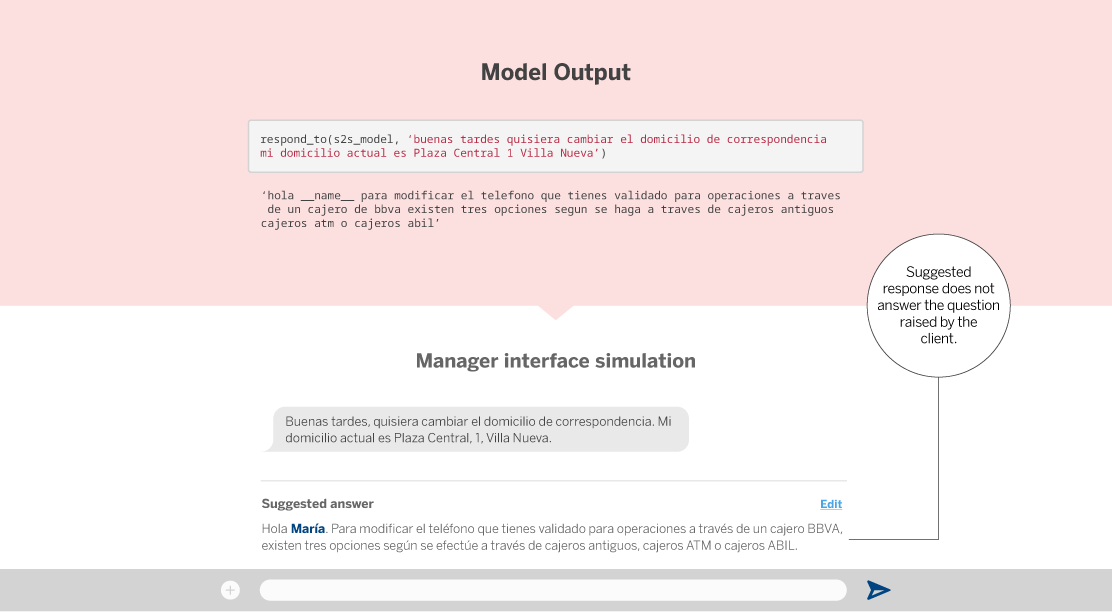

Although translation is one of the most obvious applications, the advantage of these models is that they are very versatile. Transferring their operation to our case, we could “feed” the encoder with the clients’ questions (sequence 1), and the decoder with the answers given by the managers (sequence 2). This is exactly what we did. We selected from our history more than a million short conversations initiated by the client with their manager (no more than four messages). With this dataset, we performed a classic pre-processing (lowercase, remove punctuation marks) and discarded greetings and goodbyes using regular expressions. This step is useful because one of the hyper parameters of LSTM networks is the length of the sequence to be learned. This is why, by removing this non-relevant information, we can take better advantage of the learning capacity of the model for more diverse or variable sequences, as well as reduce the computational cost of learning longer sequences. Prior to the preprocessing phase, the data arrives anonymized based on our own NER (Named Entity Recognition) library so that sensitive data such as name, phone, email, IBAN (and certain non-sensitive entities such as amounts, dates or time expressions) are masked and treated homogeneously. Consequently, the model gives them the same importance. Once the network is trained, we use some test questions (not included in the training) and manually evaluate the suggestion of our system. As we can see in the following example, the model is able to suggest a suitable answer to client questions.

How do we validate our seq2seq model?

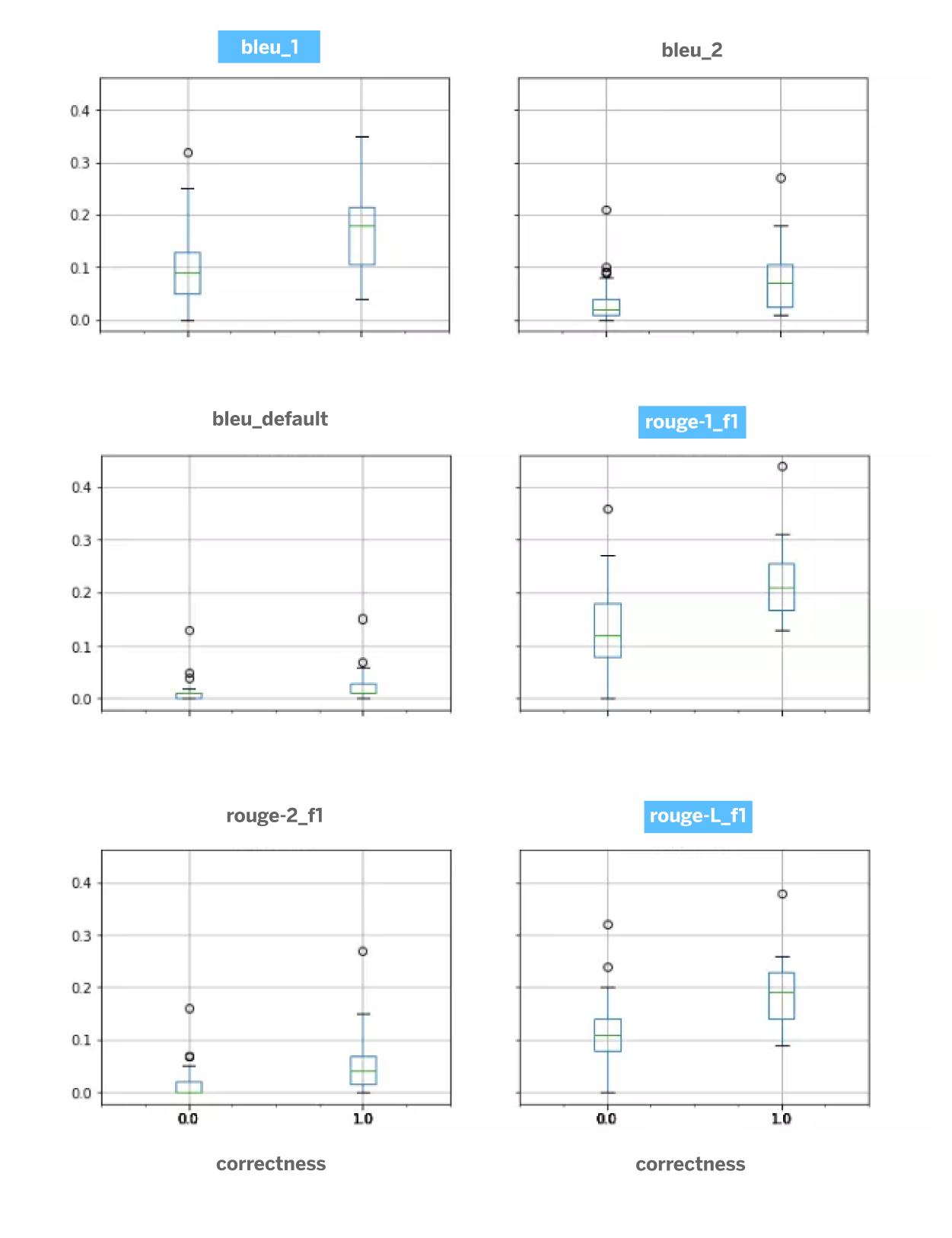

Evaluating the result of an automatically generated text is a complex task. On the one hand, it is impossible to manually evaluate a relatively large test set of questions and answers. In addition, if we wanted to optimize the model, we would subsequently need some or other method to measure the quality and appropriateness of the answers suggested by the model automatically. However, we are able to make a manual evaluation that allows us to ascertain – with a small set of messages and according to our criteria as clients1. We use two criteria for manual evaluation: answerability and correctness. The first of these qualitative metrics determines in which degree a question can be answered, in order to check what the business impact would be, and the second evaluates whether the answer suggested by the model is correct or not, which gives us an idea of the quality of our seq2seq model. With this evaluation we found that not all client questions can be solved with this approach at the moment. The main reason lies in the need to take into account contextual information, either previously provided by the client, previously commented by a specific manager or personal and financial information of the client. – which answers are appropriate and which are not. Subsequently, the correlation is calculated between our manual evaluation and the evaluation obtained by sets of automatic metrics such as Rouge, Blue, Meteor or Accuracy. The following figure represents the correlation between the manual evaluation (false: wrong answer, true: correct answer) and the values of the automatic metrics. In a first analysis we observe that bleu_1, rouge_1 and especially rouge-L are the metrics that best align with the human criteria. This is important for the optimization of the seq2seq architecture and for the automatic evaluation of the system. Although this study would require further research, we consider it sufficient for this prototyping phase.

Notes

- We use two criteria for manual evaluation: answerability and correctness. The first of these qualitative metrics determines in which degree a question can be answered, in order to check what the business impact would be, and the second evaluates whether the answer suggested by the model is correct or not, which gives us an idea of the quality of our seq2seq model. With this evaluation we found that not all client questions can be solved with this approach at the moment. The main reason lies in the need to take into account contextual information, either previously provided by the client, previously commented by a specific manager or personal and financial information of the client. ↩︎