Fairness by Design in Machine Learning is Going Mainstream

This week, the Harvard Business Review published an article written by the leaders of a health analytics project that uses deep learning to identify people at risk of cardiovascular disease.

The researchers behind the Stroke Belt Project offer useful advice for anyone who wants to avoid sociodemographic, racial, or any other kind of human bias introduced by the people who prepare the data, build the model, or optimize its outputs.

To promote “Fairness by Design”, a concept that we at BBVA Data & Analytics try to keep in mind when modelling and sampling data, they offer the following recommendations:

- Pair data scientists with social scientists to bring a humanist perspective to the data and to the solution being implemented.

- Make data scientists aware. Those responsible for preparing the data that will feed a machine learning model should be made aware of their own biases.

- Measure fairness. Using metrics that quantify fairness makes it possible to detect bias and then correct it.

- Look beyond representativeness. Once fairness measures are in place, strike a balance between representing future cases and avoiding the underrepresentation of minorities.

- Keep debiasing in mind and, if necessary, avoid sociodemographic categorization altogether.
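To make the “measure fairness” recommendation concrete, here is a minimal sketch of two widely used group-fairness metrics: demographic parity difference (the gap between the highest and lowest positive-prediction rates across groups) and the disparate impact ratio (lowest rate divided by highest rate). These are common choices in the fairness literature, not necessarily the metrics used in the Stroke Belt Project or in our own work; the function names are hypothetical, and the sketch assumes binary predictions encoded as 0/1 with one group label per prediction.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group.

    Assumes predictions are 0/1 integers aligned with group labels.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred  # adds 1 only for positive predictions
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the most and least favoured groups (0.0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    rates = positive_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any positive prediction, treat outcomes as equal.
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical toy data: model decisions for two groups, "a" and "b".
    preds = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(positive_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_difference(preds, groups))  # 0.5
    print(round(disparate_impact_ratio(preds, groups), 3))  # 0.333
```

Once a metric like this is tracked, bias becomes something that can be monitored and corrected over time, for example by reweighting training samples or adjusting decision thresholds per group, rather than something noticed only after deployment.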

Fairness is one of the main concepts that BBVA Data & Analytics has been striving to introduce into financial modelling. We recently published a paper, “Reinforcement Learning for Fair Dynamic Pricing” (Maestre, 2018), on the importance of making fairness the main design principle when relying on artificial intelligence to prepare financial projections (see Section B of the paper):

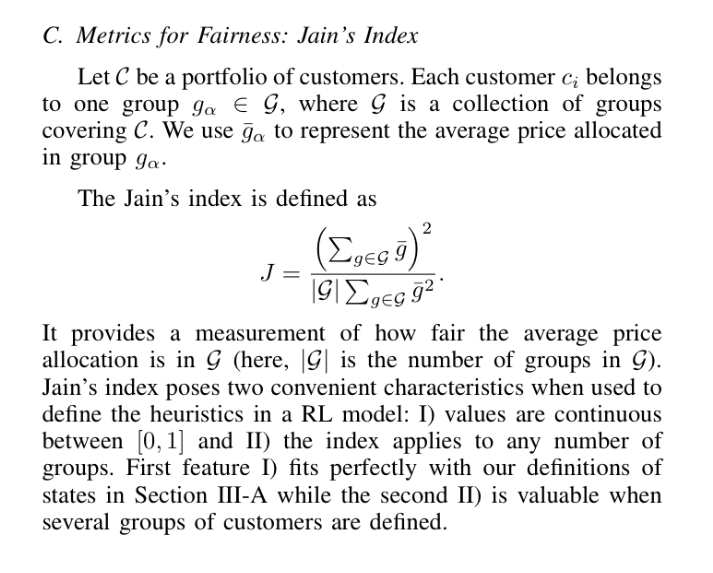

Moreover, as the HBR article recommends (point 3), we have proposed metrics to quantify fairness.

We have also written several articles and spoken at conferences on this topic, helping to bring this debate into the digital transformation that BBVA is undergoing. Particular attention must be paid to how groups are segmented (points 4 and 5 in the HBR article), something we try to achieve with our client2vec models.

As the HBR article underlines, introducing “Fairness by Design” is not a drag on innovation but a way of making advanced analytics more robust and reliable, with better results across different sociodemographic segments.